
Observability in Kubernetes with OpenTelemetry (Rust), Prometheus, Loki & Tempo

This tutorial will guide you through setting up an observability stack in Kubernetes using OpenTelemetry (Rust), Prometheus, Loki, Tempo, and Grafana.

We’ll cover:

- Metrics: Collected with OpenTelemetry and sent to Prometheus.

- Logs: Shipped via OpenTelemetry to Loki.

- Traces: Sent to Tempo for distributed tracing.

Metrics

Using OpenTelemetry to generate metrics and push them to Prometheus via the prometheusremotewrite exporter has different trade-offs from the traditional approach of using the Prometheus SDK, exposing a /metrics endpoint, and letting Prometheus scrape it.

Why use OpenTelemetry for metrics?

- Push-Based Model: Works well in environments where scraping isn’t ideal (e.g., short-lived jobs, serverless functions).

- Filtering: Some applications generate too many low-value metrics. You can drop specific labels or metric types before they reach Prometheus.

- Multi-Destination Export: Send metrics to multiple backends (e.g., Prometheus and Datadog/New Relic).

- Better Control of Data Flow: Allows control over when, where, and how metrics are sent.

- Temporarily Buffer Metrics Before Sending: Ensures metric retention when Prometheus is temporarily unavailable or under heavy load.
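
To make the filtering idea a bit more concrete, here is a minimal Rust sketch (standard library only) that drops unwanted labels and whole metric families before export. The `DataPoint` type, the `filter_points` function, and the label and metric names are hypothetical and exist only for illustration. In the setup described in this tutorial, this kind of filtering would normally be done by processors in the OpenTelemetry Collector, not in application code.

```rust
use std::collections::BTreeMap;

/// Hypothetical in-memory data point: a metric name, its labels, and a value.
#[derive(Debug, Clone, PartialEq)]
pub struct DataPoint {
    pub name: String,
    pub labels: BTreeMap<String, String>,
    pub value: f64,
}

/// Drops every metric whose name starts with one of `denied_prefixes`
/// and removes every label whose key appears in `denied_labels`.
pub fn filter_points(
    points: Vec<DataPoint>,
    denied_labels: &[&str],
    denied_prefixes: &[&str],
) -> Vec<DataPoint> {
    points
        .into_iter()
        // Drop whole metrics first, so no work is spent on their labels.
        .filter(|p| !denied_prefixes.iter().any(|prefix| p.name.starts_with(prefix)))
        .map(|mut p| {
            // Keep only the labels that are not on the deny list.
            p.labels.retain(|key, _| !denied_labels.contains(&key.as_str()));
            p
        })
        .collect()
}
```

Calling `filter_points(points, &["pod_ip"], &["debug_"])` would keep a metric such as `http_requests_total` without its `pod_ip` label and discard anything whose name starts with `debug_`.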

Logs

Traditionally, logs in Kubernetes are scraped using Promtail and forwarded to Loki. OpenTelemetry provides an alternative approach: instead of scraping logs, the OpenTelemetry Collector can directly receive, process, and push logs to Loki.

Why use OpenTelemetry for logs?

- Simpler Deployment: Removing Promtail means one less component to deploy and can reduce resource usage.

- Better Processing Capabilities: Supports log filtering, enrichment, and redaction before logs are sent to Loki.

- Push-Based Logging Instead of Scraping: In Kubernetes, pods are ephemeral, and scraping logs with Promtail can miss short-lived containers.

- Supports Multiple Exporters (Not Just Loki): Send logs to multiple backends (e.g., Loki, Elasticsearch, S3).
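
As a rough illustration of what redaction before export means, the following Rust sketch (standard library only) masks the values of sensitive log attributes. The `SENSITIVE_KEYS` list and the `redact_attributes` function are assumptions made for this example. In practice the Collector would apply this kind of rule in its processing pipeline.

```rust
use std::collections::BTreeMap;

/// Hypothetical list of attribute keys whose values must not be exported.
const SENSITIVE_KEYS: [&str; 3] = ["password", "authorization", "api_key"];

/// Returns a copy of the log attributes with sensitive values masked.
/// Key matching is ASCII case-insensitive; the input map is not modified.
pub fn redact_attributes(attrs: &BTreeMap<String, String>) -> BTreeMap<String, String> {
    attrs
        .iter()
        .map(|(key, value)| {
            let is_sensitive = SENSITIVE_KEYS
                .iter()
                .any(|sensitive| key.eq_ignore_ascii_case(sensitive));
            let value = if is_sensitive {
                "[REDACTED]".to_string()
            } else {
                value.clone()
            };
            (key.clone(), value)
        })
        .collect()
}
```

Returning a new map instead of mutating the input keeps the original record available to the application, which is a design choice of this sketch and not a requirement.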

Traces

OpenTelemetry is widely used for collecting traces and sending them to various backends. Here’s why traces are beneficial in Kubernetes:

- Enhanced Observability in Distributed Systems: Helps track request flows across distributed microservices in ephemeral pods.

- Faster Root Cause Analysis: Provides visibility into failures and latency issues that might be missed in logs and metrics.

- Performance Optimization and Bottleneck Detection: Kubernetes workloads can scale dynamically based on demand, making it hard to find performance bottlenecks without full visibility.

Requirements

- Kubernetes 1.22+

- Helm

Helm Charts Used

- open-telemetry/opentelemetry-collector

- prometheus-community/kube-prometheus-stack

- grafana/loki

- grafana/tempo-distributed

Create the namespace

We’ll install all the components in the monitoring namespace:

kubectl create ns monitoring

Add the Helm repositories

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts

helm repo add grafana https://grafana.github.io/helm-charts

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts

helm repo update

Install OpenTelemetry Collector

Create a values.yaml file with the following configuration:

config:
  extensions:
    health_check: {}
  exporters:
    otlphttp/tempo:
      endpoint: http://tempo-gateway:80
      tls:
        insecure: true
    prometheusremotewrite:
      endpoint: http://prometheus-kube-prometheus-prometheus:9090/api/v1/write
    otlphttp/loki:
      endpoint: http://loki-gateway/otlp
  processors:
    batch: {}
  receivers:
    otlp:
      protocols:
        grpc:
          endpoint: 0.0.0.0:4317
        http:
          endpoint: 0.0.0.0:4318
  service:
    extensions:
      - health_check
    pipelines:
      logs:
        exporters:
          - otlphttp/loki
          - debug
        processors:
          - batch
        receivers:
          - otlp
      metrics:
        exporters:
          - debug
          - prometheusremotewrite
        processors:
          - batch
        receivers:
          - otlp
      traces:
        exporters:
          - debug
          - otlphttp/tempo
        processors:
          - batch
        receivers:
          - otlp
    telemetry:
      metrics:
        address: ${env:MY_POD_IP}:8888
image:
  repository: otel/opentelemetry-collector-contrib
mode: deployment
presets:
  kubernetesAttributes:
    enabled: true

Install the OpenTelemetry Collector:

helm upgrade opentelemetry-collector open-telemetry/opentelemetry-collector --values values.yaml -n monitoring --install --debug
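
The two otlphttp exporters in the collector configuration are given a base endpoint only. To my understanding, the exporter appends the standard OTLP/HTTP path for each signal to that base URL, which is why the Loki endpoint ends in /otlp and the Tempo endpoint has no path at all. The Rust sketch below shows the URLs this would produce. `Signal` and `otlp_http_url` are hypothetical names used for illustration, not collector code, and trimming a trailing slash is a choice made for this sketch.

```rust
/// The three OTLP signals handled by the pipelines in values.yaml.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Signal {
    Traces,
    Metrics,
    Logs,
}

impl Signal {
    /// Default OTLP/HTTP request path for the signal.
    pub fn path(self) -> &'static str {
        match self {
            Signal::Traces => "/v1/traces",
            Signal::Metrics => "/v1/metrics",
            Signal::Logs => "/v1/logs",
        }
    }
}

/// Joins a base endpoint and the per-signal path.
/// A trailing slash on the base endpoint is removed first (sketch-only choice).
pub fn otlp_http_url(endpoint: &str, signal: Signal) -> String {
    format!("{}{}", endpoint.trim_end_matches('/'), signal.path())
}
```

With the endpoints from the configuration, `otlp_http_url("http://loki-gateway/otlp", Signal::Logs)` gives `http://loki-gateway/otlp/v1/logs`, and `otlp_http_url("http://tempo-gateway:80", Signal::Traces)` gives `http://tempo-gateway:80/v1/traces`.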

Install Prometheus & Grafana

Create a values.yaml file:

grafana:
  enabled: true
alertmanager:
  enabled: false
nodeExporter:
  enabled: false
kubeStateMetrics:
  enabled: false
prometheus:
  prometheusSpec:
    enableRemoteWriteReceiver: true

Install Prometheus & Grafana:

helm upgrade prometheus prometheus-community/kube-prometheus-stack --values values.yaml -n monitoring --install --debug

Install Loki

Create a values.yaml file:

minio:
  enabled: true
loki:
  auth_enabled: false
  schemaConfig:
    configs:
      - from: 2024-04-01
        object_store: s3
        store: tsdb
        schema: v13
        index:
          prefix: index_
          period: 24h
lokiCanary:
  enabled: false
test:
  enabled: false
chunksCache:
  enabled: false

Install Loki:

helm upgrade loki grafana/loki --values values.yaml -n monitoring --install --debug

Install Tempo

Create a values.yaml file:

gateway:
  enabled: true
  ingress:
    enabled: false
  basicAuth:
    enabled: false
metaMonitoring:
  serviceMonitor:
    enabled: false
traces:
  otlp:
    http:
      enabled: true
    grpc:
      enabled: true

Install Tempo:

helm upgrade tempo grafana/tempo-distributed --values values.yaml --install -n monitoring --debug

For this tutorial, I configured everything in the simplest possible way, leaving out things like security, testing, HA, and caching. Authentication is strongly recommended for production environments.

Port Forwarding

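I’ll use port forwarding to reach the collector at http://localhost:4317 and Grafana at http://localhost:3000. You can use any address or port you want; just make sure the code points to the right URL and port. With the release name prometheus used above, the kube-prometheus-stack chart should name the Grafana service prometheus-grafana; if yours differs, list the services with kubectl get svc -n monitoring.

kubectl port-forward svc/prometheus-grafana 3000:80 -n monitoring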

kubectl port-forward svc/opentelemetry-collector 4317:4317 -n monitoring
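
If you choose a different address or port, one way to avoid hard-coding it is to read the collector endpoint from the environment and fall back to the port-forwarded default. The sketch below uses only the standard library. `resolve_endpoint` and `endpoint_from_env` are hypothetical helper names, while `OTEL_EXPORTER_OTLP_ENDPOINT` is the environment variable name defined by the OpenTelemetry specification for this purpose.

```rust
use std::env;

/// Default used in this tutorial: the port-forwarded collector (gRPC).
pub const DEFAULT_ENDPOINT: &str = "http://localhost:4317";

/// Picks the configured endpoint if it is present and non-blank,
/// otherwise falls back to `DEFAULT_ENDPOINT`.
pub fn resolve_endpoint(configured: Option<&str>) -> String {
    match configured.map(str::trim) {
        Some(value) if !value.is_empty() => value.to_string(),
        _ => DEFAULT_ENDPOINT.to_string(),
    }
}

/// Reads the endpoint from the OTEL_EXPORTER_OTLP_ENDPOINT environment variable.
pub fn endpoint_from_env() -> String {
    let value = env::var("OTEL_EXPORTER_OTLP_ENDPOINT").ok();
    resolve_endpoint(value.as_deref())
}
```

The returned string could then be passed to the exporter builders wherever the application sets the collector endpoint. Keeping `resolve_endpoint` free of environment access makes the fallback logic easy to test.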

Rust Application

I’ll use a Rust application to demonstrate the OpenTelemetry integration. It generates metrics, logs, and traces. You can use any other language that OpenTelemetry supports; just point it at the collector URL.

cargo new --bin basic-otlp

Add the following dependencies to Cargo.toml:

[dependencies]

opentelemetry = "0.28.0"

opentelemetry-appender-tracing = "0.28.1"

opentelemetry-otlp = { version = "0.28.0", features = ["grpc-tonic"] }

opentelemetry_sdk = "0.28.0"

tokio = { version = "1.43.0", features = ["full"] }

tracing = { version = "0.1.41", features = ["std"] }

tracing-subscriber = { version = "0.3.19", features = ["env-filter", "registry", "std", "fmt"] }

Then put the following code in src/main.rs:

use opentelemetry::trace::{TraceContextExt, Tracer};
use opentelemetry::KeyValue;
use opentelemetry::{global, InstrumentationScope};
use opentelemetry_appender_tracing::layer::OpenTelemetryTracingBridge;
use opentelemetry_otlp::{LogExporter, MetricExporter, SpanExporter, WithExportConfig};
use opentelemetry_sdk::logs::SdkLoggerProvider;
use opentelemetry_sdk::metrics::SdkMeterProvider;
use opentelemetry_sdk::trace::SdkTracerProvider;
use opentelemetry_sdk::Resource;
use std::error::Error;
use std::sync::OnceLock;
use tracing::info;
use tracing_subscriber::prelude::*;
use tracing_subscriber::EnvFilter;

fn get_resource() -> Resource {
    static RESOURCE: OnceLock<Resource> = OnceLock::new();
    RESOURCE
        .get_or_init(|| {
            Resource::builder()
                .with_service_name("basic-otlp-example-grpc")
                .build()
        })
        .clone()
}

fn init_traces() -> SdkTracerProvider {
    let exporter = SpanExporter::builder()
        .with_tonic()
        .with_endpoint("http://localhost:4317")
        .build()
        .expect("Failed to create span exporter");
    SdkTracerProvider::builder()
        .with_resource(get_resource())
        .with_batch_exporter(exporter)
        .build()
}

fn init_metrics() -> SdkMeterProvider {
    let exporter = MetricExporter::builder()
        .with_tonic()
        .with_endpoint("http://localhost:4317")
        .build()
        .expect("Failed to create metric exporter");
    SdkMeterProvider::builder()
        .with_periodic_exporter(exporter)
        .with_resource(get_resource())
        .build()
}

fn init_logs() -> SdkLoggerProvider {
    let exporter = LogExporter::builder()
        .with_tonic()
        .with_endpoint("http://localhost:4317")
        .build()
        .expect("Failed to create log exporter");
    SdkLoggerProvider::builder()
        .with_resource(get_resource())
        .with_batch_exporter(exporter)
        .build()
}

#[tokio::main]
async fn main() -> Result<(), Box<dyn Error + Send + Sync + 'static>> {
    let logger_provider = init_logs();

    // Create a new OpenTelemetryTracingBridge using the above LoggerProvider.
    let otel_layer = OpenTelemetryTracingBridge::new(&logger_provider);

    // For the OpenTelemetry layer, add a tracing filter to filter events from
    // OpenTelemetry and its dependent crates (opentelemetry-otlp uses crates
    // like reqwest/tonic etc.) from being sent back to OTel itself, thus
    // preventing infinite telemetry generation. The filter levels are set as
    // follows:
    // - Allow `info` level and above by default.
    // - Restrict `opentelemetry`, `hyper`, `tonic`, and `reqwest` completely.
    // Note: This will also drop events from crates like `tonic` etc. even when
    // they are used outside the OTLP Exporter. For more details, see:
    // https://github.com/open-telemetry/opentelemetry-rust/issues/761
    let filter_otel = EnvFilter::new("info")
        .add_directive("hyper=off".parse().unwrap())
        .add_directive("opentelemetry=off".parse().unwrap())
        .add_directive("tonic=off".parse().unwrap())
        .add_directive("h2=off".parse().unwrap())
        .add_directive("reqwest=off".parse().unwrap());
    let otel_layer = otel_layer.with_filter(filter_otel);

    // Create a new tracing::Fmt layer to print the logs to stdout. It has a
    // default filter of `info` level and above, and `debug` and above for logs
    // from OpenTelemetry crates. The filter levels can be customized as needed.
    let filter_fmt = EnvFilter::new("info").add_directive("opentelemetry=debug".parse().unwrap());
    let fmt_layer = tracing_subscriber::fmt::layer()
        .with_thread_names(true)
        .with_filter(filter_fmt);

    // Initialize the tracing subscriber with the OpenTelemetry layer and the
    // Fmt layer.
    tracing_subscriber::registry()
        .with(otel_layer)
        .with(fmt_layer)
        .init();

    // At this point Logs (OTel Logs and Fmt Logs) are initialized, which will
    // allow internal-logs from Tracing/Metrics initializer to be captured.
    let tracer_provider = init_traces();

    // Set the global tracer provider using a clone of the tracer_provider.
    // Setting global tracer provider is required if other parts of the application
    // uses global::tracer() or global::tracer_with_version() to get a tracer.
    // Cloning simply creates a new reference to the same tracer provider. It is
    // important to hold on to the tracer_provider here, so as to invoke
    // shutdown on it when application ends.
    global::set_tracer_provider(tracer_provider.clone());

    let meter_provider = init_metrics();

    // Set the global meter provider using a clone of the meter_provider.
    // Setting global meter provider is required if other parts of the application
    // uses global::meter() or global::meter_with_version() to get a meter.
    // Cloning simply creates a new reference to the same meter provider. It is
    // important to hold on to the meter_provider here, so as to invoke
    // shutdown on it when application ends.
    global::set_meter_provider(meter_provider.clone());

    let common_scope_attributes = vec![KeyValue::new("scope-key", "scope-value")];
    let scope = InstrumentationScope::builder("basic")
        .with_version("1.0")
        .with_attributes(common_scope_attributes)
        .build();

    let tracer = global::tracer_with_scope(scope.clone());
    let meter = global::meter_with_scope(scope);

    let counter = meter
        .u64_counter("test_counter")
        .with_description("a simple counter for demo purposes.")
        .with_unit("my_unit")
        .build();

    for _ in 0..10 {
        counter.add(1, &[KeyValue::new("test_key", "test_value")]);
    }

    tracer.in_span("Main operation", |cx| {
        let span = cx.span();
        span.add_event(
            "Nice operation!".to_string(),
            vec![KeyValue::new("bogons", 100)],
        );
        span.set_attribute(KeyValue::new("another.key", "yes"));

        info!(name: "my-event-inside-span", target: "my-target", "hello from {}. My price is {}. I am also inside a Span!", "banana", 2.99);

        tracer.in_span("Sub operation...", |cx| {
            let span = cx.span();
            span.set_attribute(KeyValue::new("another.key", "yes"));
            span.add_event("Sub span event", vec![]);
        });
    });

    info!(name: "my-event", target: "my-target", "hello from {}. My price is {}", "apple", 1.99);

    tracer_provider.shutdown()?;
    meter_provider.shutdown()?;
    logger_provider.shutdown()?;

    Ok(())
}

Run cargo run. If you see logs like the following, you should be good to go:

2025-02-26T15:22:04.038040Z DEBUG main opentelemetry-otlp: name="TracesTonicChannelBuilding"

2025-02-26T15:22:04.038174Z DEBUG main opentelemetry-otlp: name="TonicChannelBuilt" endpoint="http://localhost:4317" timeout_in_millisecs=10000 compression="None" headers="[]"

2025-02-26T15:22:04.038215Z DEBUG main opentelemetry-otlp: name="TonicsTracesClientBuilt"

2025-02-26T15:22:04.038238Z DEBUG main opentelemetry-otlp: name="SpanExporterBuilt"

2025-02-26T15:22:04.038734Z DEBUG main opentelemetry-otlp: name="MetricsTonicChannelBuilding"

2025-02-26T15:22:04.038777Z INFO OpenTelemetry.Traces.BatchProcessor opentelemetry_sdk: name="BatchSpanProcessor.ThreadStarted" interval_in_millisecs=5000 max_export_batch_size=512 max_queue_size=2048

2025-02-26T15:22:04.038870Z DEBUG main opentelemetry-otlp: name="TonicChannelBuilt" endpoint="http://localhost:4317" timeout_in_millisecs=10000 compression="None" headers="[]"

2025-02-26T15:22:04.038910Z DEBUG main opentelemetry-otlp: name="TonicsMetricsClientBuilt"

2025-02-26T15:22:04.038925Z DEBUG main opentelemetry-otlp: name="MetricExporterBuilt"

2025-02-26T15:22:04.039019Z DEBUG main opentelemetry_sdk: name="MeterProvider.Building" builder="MeterProviderBuilder { resource: Some(Resource { inner: ResourceInner { attrs: {Static(\"telemetry.sdk.language\"): String(Static(\"rust\")), Static(\"service.name\"): String(Static(\"basic-otlp-example-grpc\")), Static(\"telemetry.sdk.version\"): String(Static(\"0.28.0\")), Static(\"telemetry.sdk.name\"): String(Static(\"opentelemetry\"))}, schema_url: None } }), readers: [PeriodicReader], views: 0 }"

2025-02-26T15:22:04.039078Z INFO OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderThreadStarted" interval_in_millisecs=60000

2025-02-26T15:22:04.039085Z INFO main opentelemetry_sdk: name="MeterProvider.Built"

2025-02-26T15:22:04.039110Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderThreadLoopAlive" Next export will happen after interval, unless flush or shutdown is triggered. interval_in_millisecs=60000

2025-02-26T15:22:04.039116Z INFO main opentelemetry: name="MeterProvider.GlobalSet" Global meter provider is set. Meters can now be created using global::meter() or global::meter_with_scope().

2025-02-26T15:22:04.039176Z DEBUG main opentelemetry_sdk: name="MeterProvider.NewMeterCreated" meter_name="basic"

2025-02-26T15:22:04.039253Z DEBUG main opentelemetry_sdk: name="InstrumentCreated" instrument_name="test_counter"

2025-02-26T15:22:04.039366Z INFO main my-target: hello from banana. My price is 2.99. I am also inside a Span!

2025-02-26T15:22:04.039416Z INFO main my-target: hello from apple. My price is 1.99

2025-02-26T15:22:04.039457Z DEBUG OpenTelemetry.Traces.BatchProcessor opentelemetry_sdk: name="BatchSpanProcessor.ExportingDueToShutdown"

2025-02-26T15:22:04.039551Z DEBUG OpenTelemetry.Traces.BatchProcessor opentelemetry-otlp: name="TonicsTracesClient.CallingExport"

2025-02-26T15:22:04.492377Z DEBUG OpenTelemetry.Traces.BatchProcessor opentelemetry_sdk: name="BatchSpanProcessor.ThreadExiting" reason="ShutdownRequested"

2025-02-26T15:22:04.492505Z INFO OpenTelemetry.Traces.BatchProcessor opentelemetry_sdk: name="BatchSpanProcessor.ThreadStopped"

2025-02-26T15:22:04.492934Z INFO main opentelemetry_sdk: name="MeterProvider.Shutdown" User initiated shutdown of MeterProvider.

2025-02-26T15:22:04.493158Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderThreadExportingDueToShutdown"

2025-02-26T15:22:04.493304Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="MeterProviderInvokingObservableCallbacks" count=0

2025-02-26T15:22:04.493481Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodicReaderMetricsCollected" count=1 time_taken_in_millis=0

2025-02-26T15:22:04.493632Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry-otlp: name="TonicsMetricsClient.CallingExport"

2025-02-26T15:22:04.945131Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderInvokedExport" export_result="Ok(())"

2025-02-26T15:22:04.945207Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderInvokedExporterShutdown" shutdown_result="Ok(())"

2025-02-26T15:22:04.945249Z DEBUG OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderThreadExiting" reason="ShutdownRequested"

2025-02-26T15:22:04.945269Z DEBUG main opentelemetry_sdk: name="LoggerProvider.ShutdownInvokedByUser"

2025-02-26T15:22:04.945275Z INFO OpenTelemetry.Metrics.PeriodicReader opentelemetry_sdk: name="PeriodReaderThreadStopped"

2025-02-26T15:22:04.945336Z DEBUG OpenTelemetry.Logs.BatchProcessor opentelemetry_sdk: name="BatchLogProcessor.ExportingDueToShutdown"

2025-02-26T15:22:04.945442Z DEBUG OpenTelemetry.Logs.BatchProcessor opentelemetry-otlp: name="TonicsLogsClient.CallingExport"

2025-02-26T15:22:05.408621Z DEBUG OpenTelemetry.Logs.BatchProcessor opentelemetry_sdk: name="BatchLogProcessor.ThreadExiting" reason="ShutdownRequested"

2025-02-26T15:22:05.408835Z INFO OpenTelemetry.Logs.BatchProcessor opentelemetry_sdk: name="BatchLogProcessor.ThreadStopped"
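
The loop in main.rs calls counter.add(1, ...) ten times with the same attribute, so I would expect those calls to end up as a single data point with the value 10, not as ten separate points. The toy model below sketches that idea with the standard library only: one running total per distinct attribute set. `ToyCounter` and `AttributeSet` are hypothetical names, and this is a simplified illustration under that assumption, not how the OpenTelemetry SDK is implemented.

```rust
use std::collections::BTreeMap;

/// A sorted list of key/value pairs identifying one time series.
pub type AttributeSet = Vec<(String, String)>;

/// Toy cumulative counter: one running total per distinct attribute set.
#[derive(Default)]
pub struct ToyCounter {
    totals: BTreeMap<AttributeSet, u64>,
}

impl ToyCounter {
    /// Adds `value` to the total that belongs to the given attributes.
    pub fn add(&mut self, value: u64, attributes: &[(&str, &str)]) {
        let mut key: AttributeSet = attributes
            .iter()
            .map(|(k, v)| (k.to_string(), v.to_string()))
            .collect();
        // Sort so that attribute order does not create a new series.
        key.sort();
        *self.totals.entry(key).or_insert(0) += value;
    }

    /// Returns the current total for every attribute set seen so far.
    pub fn collect(&self) -> Vec<(AttributeSet, u64)> {
        self.totals.iter().map(|(k, v)| (k.clone(), *v)).collect()
    }
}
```

Adding 1 ten times with `[("test_key", "test_value")]` leaves one entry with a total of 10 in this model; a call with a different attribute value would start a second entry.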

Now go to Grafana and use the Explore view to check out the traces, logs, and metrics.

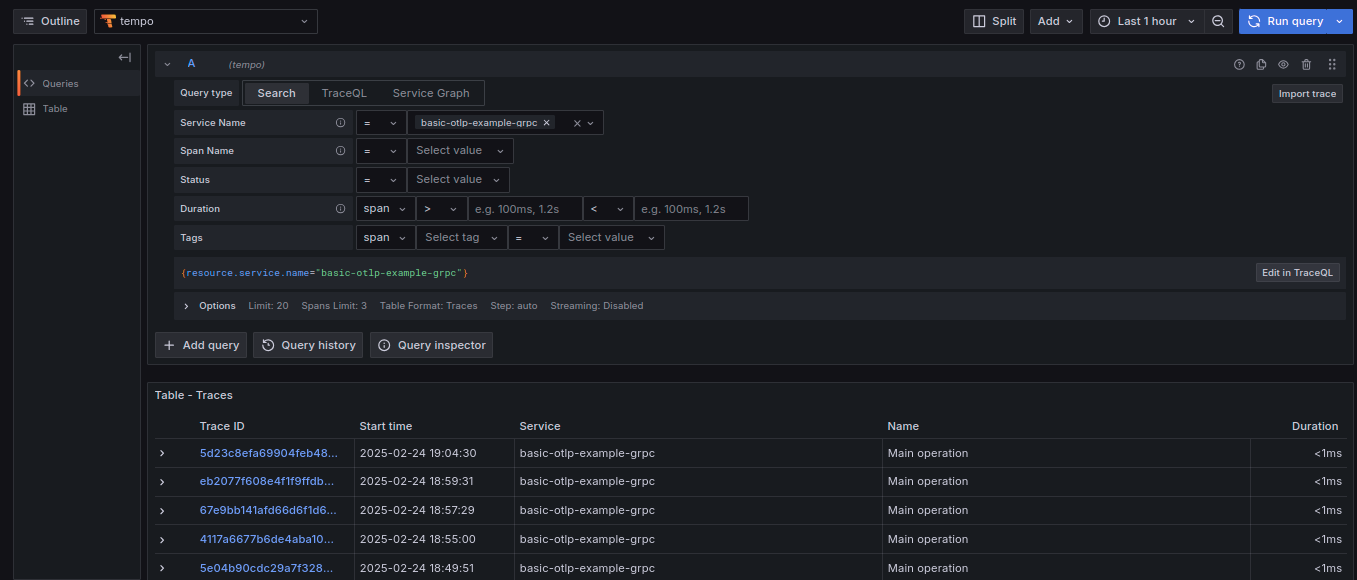

Traces

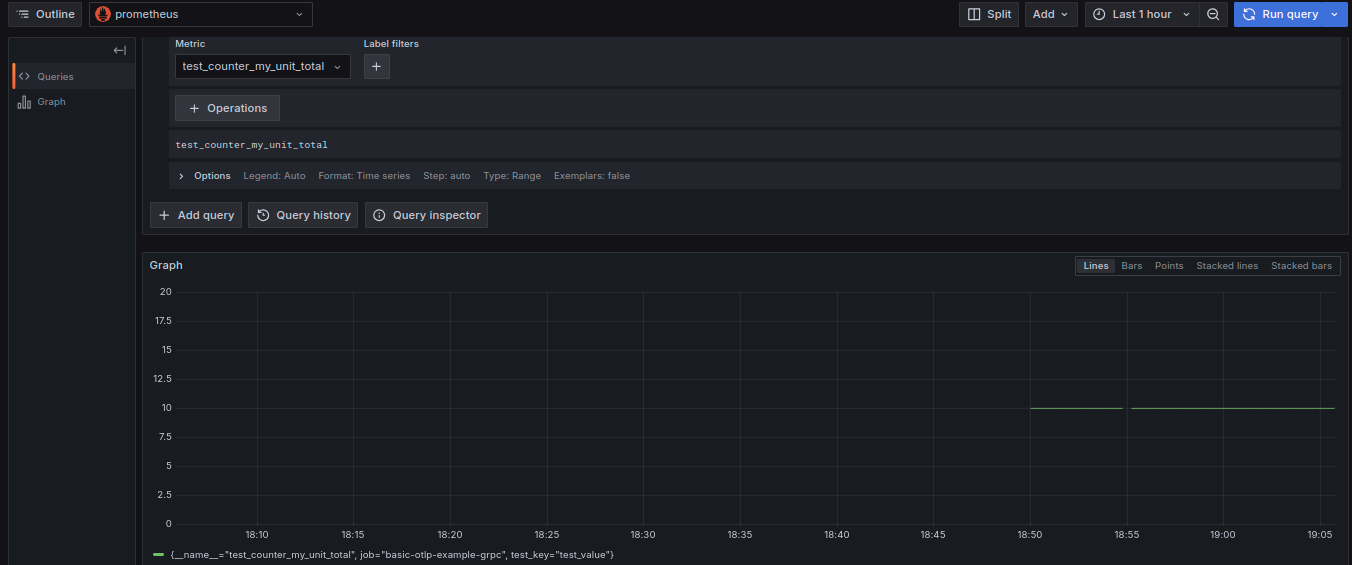

Metrics

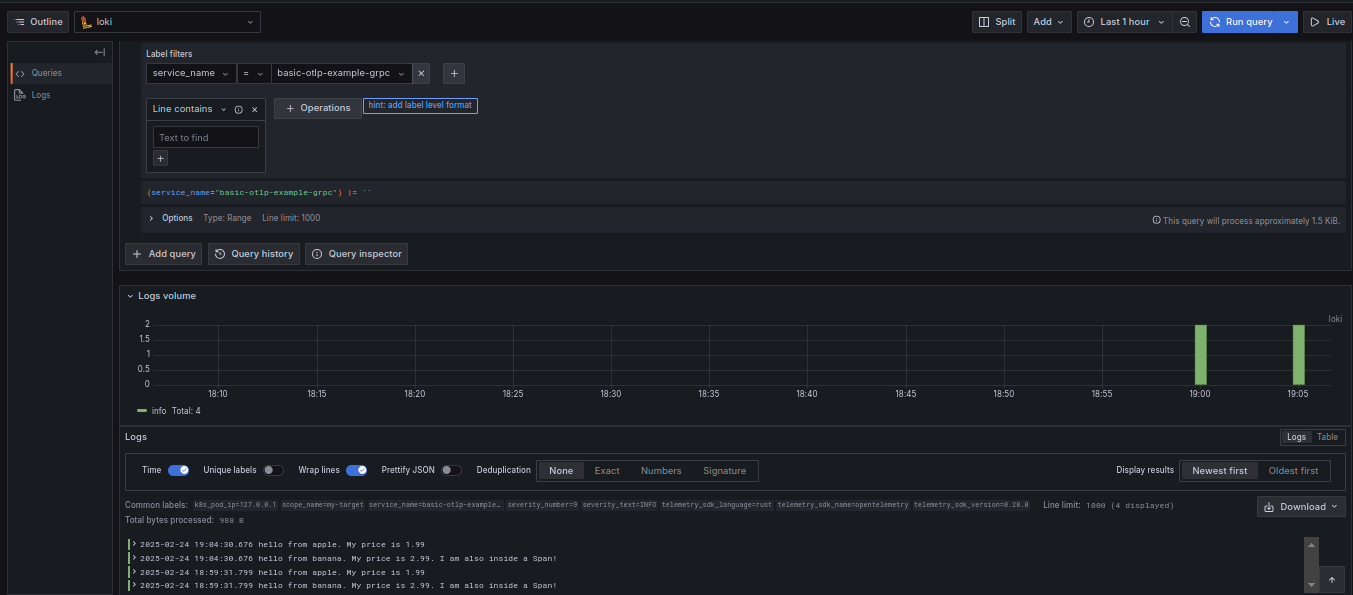

Logs


2025-02-26 00:00 +0000 (Last updated: 2025-06-26 23:15 +0000)